When prototypes outrun the enterprise

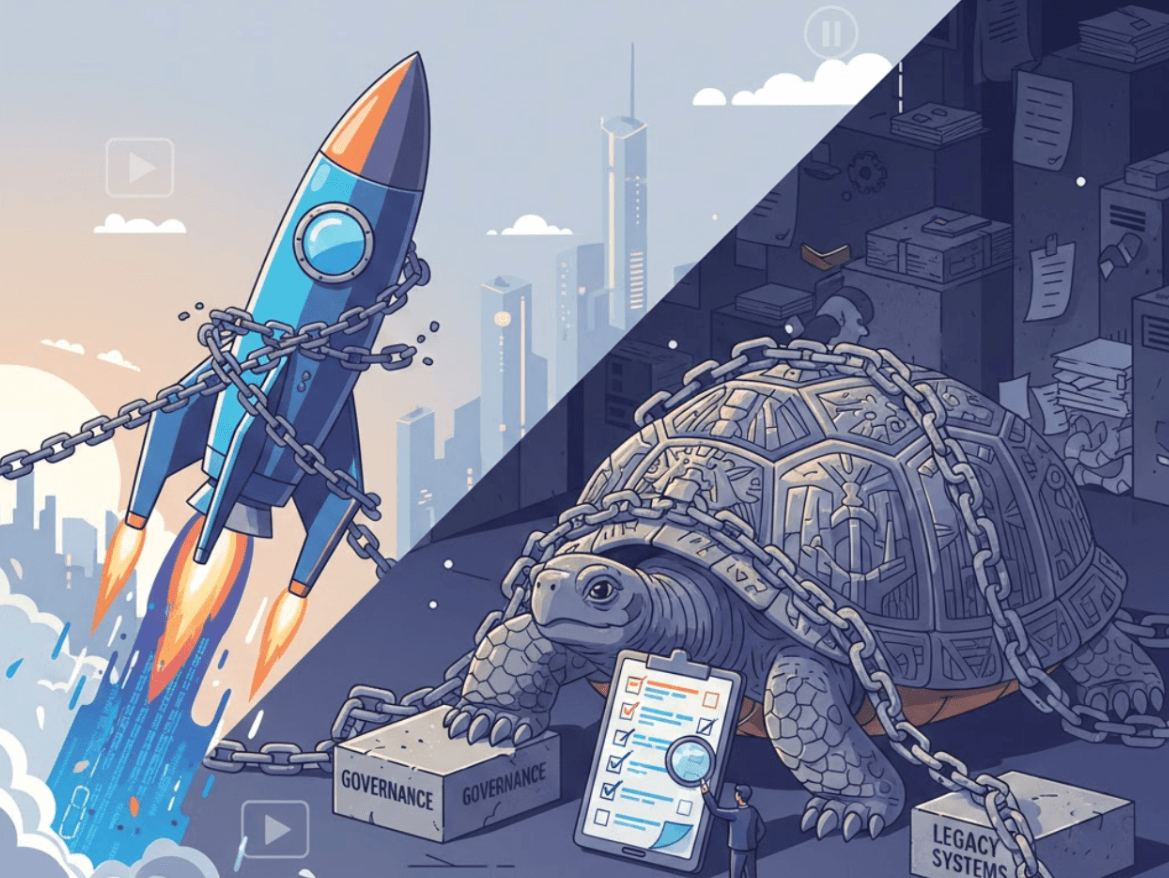

Agentic AI prototypes move far faster than traditional enterprise governance, exposing how legacy processes turn speed into risk when verification cannot keep up. In the AI era, organisations need to replace control-heavy planning with fast, learning-driven governance, because slowness has become the biggest risk.

Introduction

Prototypes sprint; enterprises stroll. Put them in the same room and you get a strange physics experiment where a two-hour demo collapses the delivery model. The prototype moves like it stole something. The enterprise moves like it is waiting for permission to walk. The energy is intoxicating and the speed is irresistible. The gap between idea and execution suddenly feels manageable. Until governance walks in with a multi-step checklist and the whole thing snaps back to (corporate) reality, complete with colour-coded risk registers.

One client told us, “We built something in a few hours and spent four weeks deciding if we were allowed to look at it.” That line has become a running joke and a running diagnosis. Agentic AI is accelerating this divide. It exposes how brittle legacy process logic has become, and it forces organisations to confront a future where speed and accuracy are not trade-offs; they are defaults. You don’t pick one anymore. You’re expected to run with both.

The dark side of vibe coding: The "Sarah Johnson" problem

Speed has a cost, and nowhere is that cost more visible than in the rise of Sarah Johnson.

We’ve all seen Sarah. On one website, she is the CEO of TechStart Inc. On another, she is the HR Director. She is often joined by "Michael Chen," a man who seems to be the Marketing Director of three different global corporations simultaneously.

Sarah and Michael are not rogue employees, but placeholders. They are the default personas embedded in the AI vibe-coding tools that teams use to spin up products in record time.

In a healthy system, Sarah is swapped out for a real human before launch. But in the current environment, where the prototype becomes the production system simply because it is the only thing moving fast enough, Sarah survives. The vibe makes it into production, but the truth doesn’t.

We are seeing enterprise tools launched with fake testimonials, exaggerated performance stats ("250% increase in engagement!"), and invented stakeholders. This isn't just sloppy; in many jurisdictions, it is a violation of consumer protection laws.

This is the dark side of the governance mismatch. When speed outpaces the ability to verify, you don't just get bugs. You get unintended fraud. You end up staging a play instead of building a business.
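Catching Sarah does not need a committee. As a minimal sketch, assuming the shipped copy is available as plain text, a pre-launch check could scan it for known placeholders. The pattern list and the `find_placeholders` helper are hypothetical and built only from the examples in this article; a real list would be maintained by the team and would not catch every invented persona.

```python
import re

# Hypothetical blocklist based on the examples in this article.
# A real team would maintain and extend its own list.
PLACEHOLDER_PATTERNS = [
    r"Sarah Johnson",
    r"Michael Chen",
    r"TechStart Inc\.?",
    r"\d+% increase in engagement",
]


def find_placeholders(text):
    """Return (line_number, matched_text) pairs for each suspected placeholder."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for pattern in PLACEHOLDER_PATTERNS:
            for match in re.finditer(pattern, line, flags=re.IGNORECASE):
                hits.append((line_number, match.group(0)))
    return hits


if __name__ == "__main__":
    page = 'Our CEO Sarah Johnson says: "250% increase in engagement!"'
    for line_number, found in find_placeholders(page):
        print(f"line {line_number}: suspected placeholder '{found}'")
```

A check like this only flags suspects; a human still has to confirm whether the name or statistic is real before launch.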

The competence test every enterprise is now taking

Many organisations view rogue agents, dashboards built in an afternoon, or hyper-speed prototypes as a threat to stability. They are wrong. These artefacts are not destabilising the organisation. They are diagnosing it.

As we observed in retail, AI agents are not a threat; they are a competence test. They measure your organisation’s ability to change with speed. The old governance model assumes work moves like a waterfall: linear, predictable, gateable. Agentic AI moves like a network: messy, adaptive, compounding.

If your governance requires a “meeting about the meeting” to approve a system that learns on its own, you aren’t managing risk; you are manufacturing obsolescence. Most companies still believe that stability is something you achieve through control, but agentic systems show that stability increasingly comes from iteration, learning, and connected feedback loops.

Why old software logic is cracking in real time

Enterprise IT was built for standardisation. Predictable workflows. Known contracts. Clean handoffs. It was cathedral architecture: methodical, impressive, slow to change.

Agentic AI works more like a street market: dynamic, adaptive, exchanging value in real time. Enterprise IT expects systems to be fully defined before they change. Agents evolve them until they’re useful. This is the real collapse of old software logic.

- Old logic: “Design everything up front, then deploy.”

- Agentic logic: “Ship it with guardrails, iterate fast, embed learning.”

The pioneers don’t scale prototypes. They scale learning. They use our Gladiator Methodology, not because the name is fun (though it is), but because it reflects reality, where value must be fought for and won quickly, not courted politely through quarters of documentation. This is where you will find your “Sarah Johnson” before going live.

Plans, reviews, and committees used to be the defence against uncertainty. But AI changes this because the new risk is slowness, not speed. The new failure mode is stagnation, not experimentation.

What enterprises should do now

Stop treating AI like a compliance problem and start treating it like compound interest. The sooner you apply it, the faster the returns accumulate. Start with simple use cases, learn, generate new data, explore, and compound. Waiting for perfect conditions is now the riskiest move you can make.

You do not need a master plan. You need two connected use cases and a system that learns between them. That’s the starting point. Everything else compounds from there. What to do next:

- Build a prototype, then immediately connect it to real data and a real workflow. Not a sandbox version, the real one.

- Shrink governance cycles from weeks to days by starting safe and validating through controlled usage, not endless documentation.

- Replace static documents with live agent behaviour as your source of truth.

- Let agents teach the organisation how to operate instead of waiting for alignment sessions that never quite align.

As one client said, “Once the agents started sharing what they learned, the roadmap wrote itself.”